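Since the rise of AI chatbots, Google's Gemini has become one of the most powerful players driving the development of intelligent systems. Beyond its conversational strengths, Gemini also opens up practical possibilities for computer vision, enabling machines to see, interpret, and describe the world around them.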

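This tutorial shows you how to use Google Gemini for computer vision: how to set up the environment, send images along with instructions, and interpret the model's output for object detection, caption generation, and OCR. We also introduce data annotation tools (such as those used for YOLO) to give context for custom training scenarios.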

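What is Google Gemini?

Google Gemini is a family of AI models that can process multiple data types, such as text, images, audio, and code. This means they can handle tasks that involve understanding both pictures and text.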

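Google Gemini 2.5 Pro features

- Multimodal input: it accepts a combination of text and images in a single request.

- Reasoning: the model analyzes the input to perform tasks such as recognizing objects or describing scenes.

- Instruction following: it responds to text instructions (prompts) that guide how it analyzes an image.

These capabilities let developers use Gemini for vision tasks through an API, without training a separate model for each job.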

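The role of data annotation: YOLO annotators

Although Gemini models offer strong zero-shot or few-shot capabilities for these computer vision tasks, building a highly specialized computer vision model requires training on a dataset tailored to the specific problem. This is where data annotation becomes critical, especially for supervised learning tasks such as training a custom object detector.

A YOLO annotator (usually meaning any tool compatible with the YOLO format, such as LabelImg, CVAT, or Roboflow) is designed for creating labeled datasets.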

What is data annotation?

Source: Link

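For object detection, annotation means drawing a bounding box around each object of interest in an image and assigning it a class label (such as "car", "person", or "dog"). These annotations tell the model what to look for, and where, during training.

Key features of annotation tools (such as YOLO annotators)

- User interface: they provide a graphical interface that lets users load images, draw boxes (or polygons, keypoints, and so on), and assign labels efficiently.

- Format compatibility: tools designed for YOLO models save annotations in the specific text-file format that YOLO training scripts expect (typically one .txt file per image, containing the class index and normalized bounding-box coordinates).

- Efficiency features: many tools include hotkeys, auto-save, and sometimes model-assisted labeling to speed up the time-consuming annotation process. Batch processing makes large image sets easier to handle.

- Integration: using a standard format such as YOLO ensures that annotated data works smoothly with popular training frameworks, including Ultralytics YOLO.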
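To make the YOLO label format concrete, here is a minimal sketch that turns one pixel-space bounding box into a YOLO label line. It assumes the common YOLO detection layout (class index, then box center x, center y, width, and height, each normalized by the image size); the function name to_yolo_line is hypothetical and not part of any annotation tool's API.

```python
def to_yolo_line(class_id: int, box_xyxy: tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space box (x1, y1, x2, y2) into one YOLO label line.

    The line holds the class index followed by the box center x, center y,
    width, and height, all normalized to the 0-1 range by the image size.
    """
    x1, y1, x2, y2 = box_xyxy
    cx = (x1 + x2) / 2 / img_w   # normalized center x
    cy = (y1 + y2) / 2 / img_h   # normalized center y
    bw = (x2 - x1) / img_w       # normalized box width
    bh = (y2 - y1) / img_h       # normalized box height
    return f"{class_id} {cx:.6f} {cy:.6f} {bw:.6f} {bh:.6f}"
```

For example, a box from (100, 50) to (300, 250) in a 640x480 image with class index 0 becomes "0 0.312500 0.312500 0.312500 0.416667". Each object in an image contributes one such line to that image's .txt label file.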

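Although Gemini can detect common objects without any prior annotation, if you need a model that detects very specific custom objects (for example, a unique type of industrial equipment or a particular product defect), you may need to collect images, annotate them with a tool such as a YOLO annotator, and train a dedicated YOLO model.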

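Code implementation: Google Gemini for computer vision

First, install the required libraries.

Step 1: Install the prerequisites

1. Install the libraries

Run this command in a notebook cell (drop the leading "!" if you run it in a terminal):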

```
!uv pip install -U -q google-genai ultralytics
```

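This command installs the google-genai library, which communicates with the Gemini API, and the ultralytics library, which provides useful functions for handling images and drawing on them.

2. Import the modules

Add these lines to your Python notebook: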

```python
import json

import cv2
import ultralytics
from google import genai
from google.genai import types
from PIL import Image
from ultralytics.utils.downloads import safe_download
from ultralytics.utils.plotting import Annotator, colors

ultralytics.checks()  # print environment and version information
```

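These lines import the libraries used for reading images (cv2, PIL), handling JSON data (json), interacting with the API (google.genai), and utility functions (ultralytics).

3. Configure the API key

Initialize the client with your Google AI API key.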

```python
# Replace "your_api_key" with your actual key
# Initialize the Gemini client with your API key
client = genai.Client(api_key="your_api_key")
```

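This step prepares the script to send authenticated requests.

Step 2: A function for interacting with Gemini

Create a function that sends requests to the model. It takes an image and a text prompt and returns the model's text output.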
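Hard-coding the key in a notebook makes it easy to leak when the notebook is shared. A minimal sketch, assuming you store the key in an environment variable instead: the variable names GEMINI_API_KEY and GOOGLE_API_KEY checked below are assumptions (adjust them to your setup), and get_api_key is a hypothetical helper.

```python
import os


def get_api_key(var_names=("GEMINI_API_KEY", "GOOGLE_API_KEY")) -> str:
    """Return the first non-empty API key found in the given environment variables."""
    for name in var_names:
        value = os.environ.get(name, "").strip()
        if value:
            return value
    # Fail loudly instead of sending unauthenticated requests later.
    raise RuntimeError(f"No API key found; set one of: {', '.join(var_names)}")
```

You would then create the client with client = genai.Client(api_key=get_api_key()).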

```python
def inference(image, prompt, temp=0.5):
    """
    Performs inference using the Google Gemini 2.5 Pro Experimental model.

    Args:
        image (PIL.Image.Image): The input image, e.g. as returned by read_image().
        prompt (str): A text prompt to guide the model's response.
        temp (float, optional): Sampling temperature for response randomness. Default is 0.5.

    Returns:
        str: The text response generated by the Gemini model based on the prompt and image.
    """
    response = client.models.generate_content(
        model="gemini-2.5-pro-exp-03-25",
        contents=[prompt, image],  # Provide both the text prompt and image as input
        config=types.GenerateContentConfig(
            temperature=temp,  # Controls creativity vs. determinism in output
        ),
    )
    return response.text  # Return the generated textual response
```

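Explanation

- This function sends the image and your text instruction (prompt) to the Gemini model named in the model argument of client.models.generate_content.

- The temperature setting (temp) affects the randomness of the output; lower values give more predictable results.

Step 3: Prepare the image data

Images must be loaded correctly before they are sent to the model. The following function downloads the image if needed, reads it, converts its color format, and returns a PIL image object together with its dimensions.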
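To illustrate why lower temperatures give more predictable output, the sketch below applies the standard softmax-with-temperature formula to a few made-up scores. This is the textbook formulation of sampling temperature, assumed here for illustration; the article does not describe Gemini's internal sampling, which may also involve other settings such as top-p or top-k.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.5, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As the temperature drops, probability mass concentrates on the highest-scoring option, so repeated runs are more likely to pick the same answer. The sketch rejects a temperature of 0 only to avoid dividing by zero.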

```python
def read_image(filename):
    # Download the file if `filename` is a URL; safe_download returns the local path
    image_name = safe_download(filename)

    # Read the image with OpenCV and convert it from BGR to RGB
    image = cv2.cvtColor(cv2.imread(str(image_name)), cv2.COLOR_BGR2RGB)

    # Extract width and height
    h, w = image.shape[:2]

    # Convert the NumPy array into a PIL image and return it with its size
    return Image.fromarray(image), w, h
```

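Explanation

- This function reads the image file with OpenCV (cv2).

- It converts the image's color order from OpenCV's default BGR to standard RGB.

- It returns the image as a PIL object suitable for the inference function, along with its width and height.

Step 4: Format the results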
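The BGR-to-RGB step matters because OpenCV loads images with the channels in blue-green-red order, while PIL and the rest of this pipeline assume red-green-blue; skipping the conversion swaps the red and blue channels. A small NumPy sketch of what that conversion does for a 3-channel image:

```python
import numpy as np

# A tiny 1x2 "image" in OpenCV's BGR channel order:
# the first pixel is pure blue, the second pixel is pure red.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reversing the last (channel) axis turns BGR into RGB, which is the same
# reordering cv2.cvtColor(..., cv2.COLOR_BGR2RGB) applies to a 3-channel image.
rgb = bgr[..., ::-1]

print(rgb[0, 0], rgb[0, 1])
```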

```python
def clean_results(results):
    """Clean the results for visualization."""
    return results.strip().removeprefix("```json").removesuffix("```").strip()
```

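This function strips surrounding whitespace and the ```json ... ``` Markdown fence that the model often wraps around its reply, leaving a plain string that json.loads can parse.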
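clean_results only handles a reply that starts exactly with ```json and ends with ```. If the model adds a sentence before the fence or omits the json tag, json.loads will fail. A slightly more tolerant sketch, offered as an alternative rather than the article's method, pulls the first fenced block out with a regular expression and falls back to the raw text; parse_json_reply is a hypothetical name.

```python
import json
import re

# Matches a fenced block such as ```json ... ``` or a plain ``` ... ``` fence.
_FENCE_RE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL | re.IGNORECASE)


def parse_json_reply(text):
    """Extract and parse JSON from a model reply, with or without code fences."""
    match = _FENCE_RE.search(text)
    payload = match.group(1) if match else text.strip()
    return json.loads(payload)
```

In the tasks below, json.loads(clean_results(results)) could be swapped for parse_json_reply(results).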

Task 1: Object detection

Gemini can find objects in an image based on your text instructions and report their locations (bounding boxes).

```python
# Define the text prompt
prompt = """
Detect the 2d bounding boxes of objects in image.
"""

# Fixed, plotting function depends on this.
output_prompt = "Return just box_2d and labels, no additional text."

image, w, h = read_image("https://media-cldnry.s-nbcnews.com/image/upload/t_fit-1000w,f_auto,q_auto:best/newscms/2019_02/2706861/190107-messy-desk-stock-cs-910a.jpg")  # Read img, extract width, height

results = inference(image, prompt + output_prompt)  # Perform inference

cln_results = json.loads(clean_results(results))  # Clean results, convert to list

annotator = Annotator(image)  # initialize Ultralytics annotator

for idx, item in enumerate(cln_results):
    # By default, the Gemini model returns output with y coordinates first.
    # Scale normalized box coordinates (0–1000) to image dimensions
    y1, x1, y2, x2 = item["box_2d"]  # bbox post processing
    y1 = y1 / 1000 * h
    x1 = x1 / 1000 * w
    y2 = y2 / 1000 * h
    x2 = x2 / 1000 * w

    if x1 > x2:
        x1, x2 = x2, x1  # Swap x-coordinates if needed
    if y1 > y2:
        y1, y2 = y2, y1  # Swap y-coordinates if needed

    annotator.box_label([x1, y1, x2, y2], label=item["label"], color=colors(idx, True))

Image.fromarray(annotator.result())  # display the output
```

Source image: Link

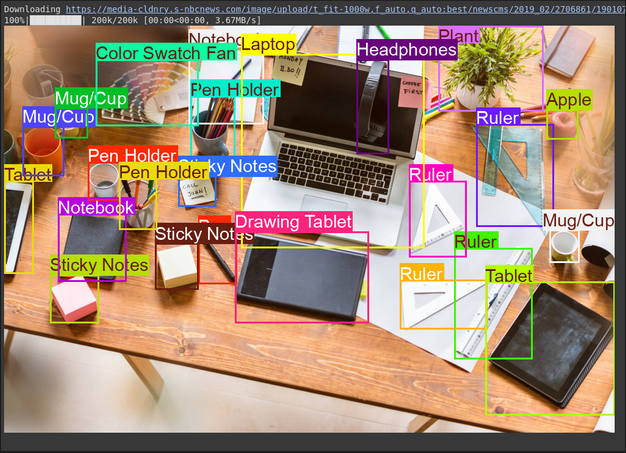

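Output

Explanation

- The prompt tells the model what to look for and how to format its output (JSON).

- The code uses the image width (w) and height (h) to convert the normalized box coordinates (0-1000) into pixel coordinates.

- The annotator draws the boxes and labels onto the image.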
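The scaling and swapping logic is repeated verbatim in Tasks 1, 2, and 4. A minimal sketch of factoring it into one helper, assuming the same [ymin, xmin, ymax, xmax] order on a 0-1000 grid that the loop above relies on; gemini_box_to_xyxy is a hypothetical name.

```python
def gemini_box_to_xyxy(box_2d, img_w, img_h):
    """Convert [ymin, xmin, ymax, xmax] on a 0-1000 grid to pixel [x1, y1, x2, y2]."""
    y1, x1, y2, x2 = box_2d
    # Scale from the 0-1000 grid to pixel units.
    x1, x2 = x1 / 1000 * img_w, x2 / 1000 * img_w
    y1, y2 = y1 / 1000 * img_h, y2 / 1000 * img_h
    # Guarantee top-left / bottom-right ordering, like the swaps in the loop.
    return [min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2)]
```

For example, [250, 0, 750, 500] on an 800x400 image maps to [0.0, 100.0, 400.0, 300.0]. Inside the loop, the arithmetic and both swaps would collapse to annotator.box_label(gemini_box_to_xyxy(item["box_2d"], w, h), label=item["label"], color=colors(idx, True)).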

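Task 2: Testing reasoning capabilities

Gemini can also handle more complex prompts that require reasoning about context, such as locating an abstract region (the area of morning light) alongside ordinary objects.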

```python
# Define the text prompt
prompt = """
Detect the 2d bounding box around:
highlight the area of morning light +
PC on table
potted plant
coffee cup on table
"""

# Fixed, plotting function depends on this.
output_prompt = "Return just box_2d and labels, no additional text."

image, w, h = read_image("https://thumbs.dreamstime.com/b/modern-office-workspace-laptop-coffee-cup-cityscape-sunrise-sleek-desk-featuring-stationery-organized-neatly-city-345762953.jpg")  # Read image and extract width, height

results = inference(image, prompt + output_prompt)

# Clean the results and load results in list format
cln_results = json.loads(clean_results(results))

annotator = Annotator(image)  # initialize Ultralytics annotator

for idx, item in enumerate(cln_results):
    # By default, the Gemini model returns output with y coordinates first.
    # Scale normalized box coordinates (0–1000) to image dimensions
    y1, x1, y2, x2 = item["box_2d"]  # bbox post processing
    y1 = y1 / 1000 * h
    x1 = x1 / 1000 * w
    y2 = y2 / 1000 * h
    x2 = x2 / 1000 * w

    if x1 > x2:
        x1, x2 = x2, x1  # Swap x-coordinates if needed
    if y1 > y2:
        y1, y2 = y2, y1  # Swap y-coordinates if needed

    annotator.box_label([x1, y1, x2, y2], label=item["label"], color=colors(idx, True))

Image.fromarray(annotator.result())  # display the output
```

Source image: Link

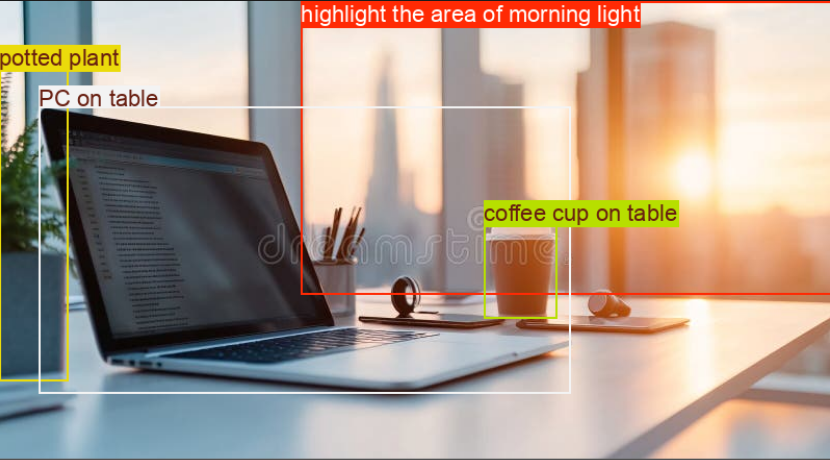

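Output

Explanation

- This code block uses a more complex prompt to test the model's reasoning ability.

- It uses the image width (w) and height (h) to convert the normalized box coordinates (0-1000) into pixel coordinates.

- The annotator draws the boxes and labels onto the image.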
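Free-form prompts like this one make it more likely that some entries come back in an unexpected shape, for example without a label or with fewer than four coordinates, and the plotting loop would then raise a KeyError or ValueError. A defensive sketch that keeps only well-formed entries before plotting; it assumes the box_2d and label keys that the loop above reads, and valid_detections is a hypothetical helper.

```python
def valid_detections(items):
    """Keep only entries shaped like {"box_2d": [4 numbers], "label": str}."""
    if not isinstance(items, list):
        return []
    kept = []
    for item in items:
        if not isinstance(item, dict):
            continue  # skip stray strings or numbers in the reply
        box = item.get("box_2d")
        label = item.get("label")
        if (
            isinstance(box, list)
            and len(box) == 4
            and all(isinstance(v, (int, float)) for v in box)
            and isinstance(label, str)
        ):
            kept.append(item)
    return kept
```

You could apply it as cln_results = valid_detections(json.loads(clean_results(results))) so that one malformed entry does not stop the whole image from being annotated.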

Task 3: Image captioning

Gemini can generate text descriptions of images.

```python
import matplotlib.pyplot as plt  # needed for plt.imshow below

# Define the text prompt
prompt = """
What's inside the image, generate a detailed captioning in the form of short
story, Make 4-5 lines and start each sentence on a new line.
"""

image, _, _ = read_image("https://cdn.britannica.com/61/93061-050-99147DCE/Statue-of-Liberty-Island-New-York-Bay.jpg")  # Read image; width and height are not needed here

plt.imshow(image)
plt.axis('off')  # Hide axes
plt.show()

print(inference(image, prompt))  # Display the results
```

Source image: Link

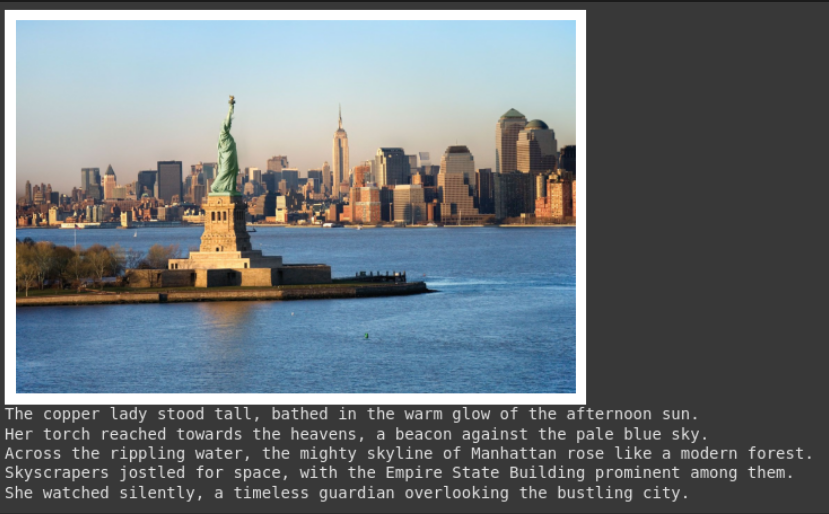

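Output

Explanation

- The prompt asks for a caption in a specific style (a short story of 4-5 lines, each sentence on a new line).

- The code displays the input image.

- The function returns the generated text, which is useful for creating alt text or summaries.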
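Language models do not always follow formatting instructions exactly, so it can help to check the caption before using it. A minimal sketch, assuming the 4-5 line target from the prompt above; caption_lines is a hypothetical helper that splits the reply into non-empty lines and reports whether the count is in range.

```python
def caption_lines(text, min_lines=4, max_lines=5):
    """Split a caption into non-empty lines and report whether the count is in range."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    return lines, min_lines <= len(lines) <= max_lines
```

For example, lines, ok = caption_lines(inference(image, prompt)); if ok is False, you might re-send the prompt or simply keep the lines you got.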

Task 4: Optical character recognition (OCR)

Gemini can read the text in an image and tell you where it found it.

```python
# Define the text prompt
prompt = """
Extract the text from the image
"""

# Fixed, plotting function depends on this.
output_prompt = """
Return just box_2d which will be location of detected text areas + label"""

image, w, h = read_image("https://cdn.mos.cms.futurecdn.net/4sUeciYBZHaLoMa5KiYw7h-1200-80.jpg")  # Read image and extract width, height

results = inference(image, prompt + output_prompt)

# Clean the results and load results in list format
cln_results = json.loads(clean_results(results))

print("\n".join(item["label"] for item in cln_results))  # print the extracted text

annotator = Annotator(image)  # initialize Ultralytics annotator

for idx, item in enumerate(cln_results):
    # By default, the Gemini model returns output with y coordinates first.
    # Scale normalized box coordinates (0–1000) to image dimensions
    y1, x1, y2, x2 = item["box_2d"]  # bbox post processing
    y1 = y1 / 1000 * h
    x1 = x1 / 1000 * w
    y2 = y2 / 1000 * h
    x2 = x2 / 1000 * w

    if x1 > x2:
        x1, x2 = x2, x1  # Swap x-coordinates if needed
    if y1 > y2:
        y1, y2 = y2, y1  # Swap y-coordinates if needed

    annotator.box_label([x1, y1, x2, y2], label=item["label"], color=colors(idx, True))

Image.fromarray(annotator.result())  # display the output
```

Source image: Link

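Output

Explanation

- The prompt is similar to the object-detection prompt, but asks for the text itself (as the label) instead of object names.

- The code extracts the text and its location, prints the text, and draws boxes on the image.

- This is useful for digitizing documents or reading the text on signs and labels in photos.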
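The model returns text boxes in no guaranteed order, while document digitization usually needs the text in reading order. A simple heuristic sketch, assuming horizontal, left-to-right text in a single column and the box_2d and label keys used above: boxes whose top edges are close together are grouped into a row, and each row is sorted by its left edge. reading_order and its row_tolerance parameter (in 0-1000 grid units) are hypothetical, and the heuristic will not cope well with rotated text or multi-column layouts.

```python
def reading_order(detections, row_tolerance=20):
    """Sort OCR detections top-to-bottom, then left-to-right.

    Coordinates are [ymin, xmin, ymax, xmax] on the 0-1000 grid. A box starts
    a new row when its top edge is more than `row_tolerance` below the top
    edge of the first box in the current row.
    """
    items = sorted(detections, key=lambda d: (d["box_2d"][0], d["box_2d"][1]))
    rows, current = [], []
    for item in items:
        if current and item["box_2d"][0] - current[0]["box_2d"][0] > row_tolerance:
            rows.append(current)
            current = []
        current.append(item)
    if current:
        rows.append(current)
    # Within each row, order boxes by their left edge (xmin).
    return [d for row in rows for d in sorted(row, key=lambda d: d["box_2d"][1])]
```

Joining the labels, for example " ".join(d["label"] for d in reading_order(cln_results)), gives a rough plain-text transcription.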

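Summary

With simple API calls, Google Gemini makes tasks such as object detection, image captioning, and OCR easy. By sending an image together with clear text instructions, you can guide the model to understand the image and get usable results right away.

Although Gemini is well suited to general-purpose tasks and quick experiments, it is not always the best fit for highly specialized use cases. If you are working with niche objects or need tighter control over accuracy, the traditional approach still works: collect a dataset, annotate it with a tool such as a YOLO annotator, and train a custom model for your needs.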
